We all grew up with movies about AI, and it always seemed to be decades off. Then ChatGPT was announced and suddenly it's everywhere.

It's like the future arrived. What happened? How did we get here?

Was it a breakthrough in Artificial Intelligence?

No. Not really. ChatGPT and its kin use a Transformer Network, built on Neural Networks. Neural Nets were invented in 1943. The only recent innovation has been the use of Multi-Head Attention, thanks to a paper from Google in 2017 ("Attention Is All You Need").

Was it a breakthrough in Learning?

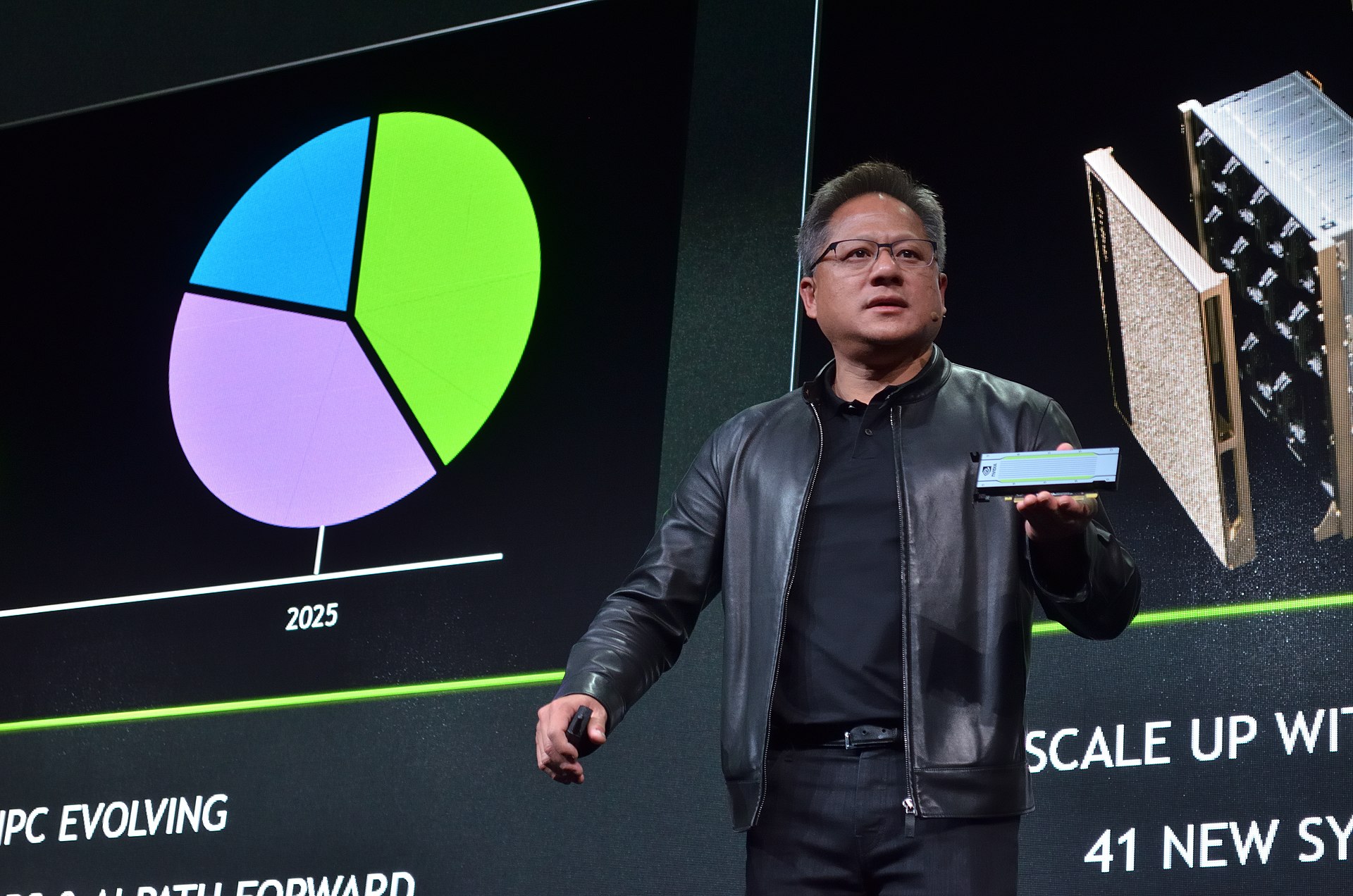

Again, no. Not really. Transformer networks are trained using data and Backpropagation. The modern version of backpropagation was popularized in 1986 (Rumelhart, Hinton, and Williams). So why is this happening now? And why is NVidia now the largest company in the world?

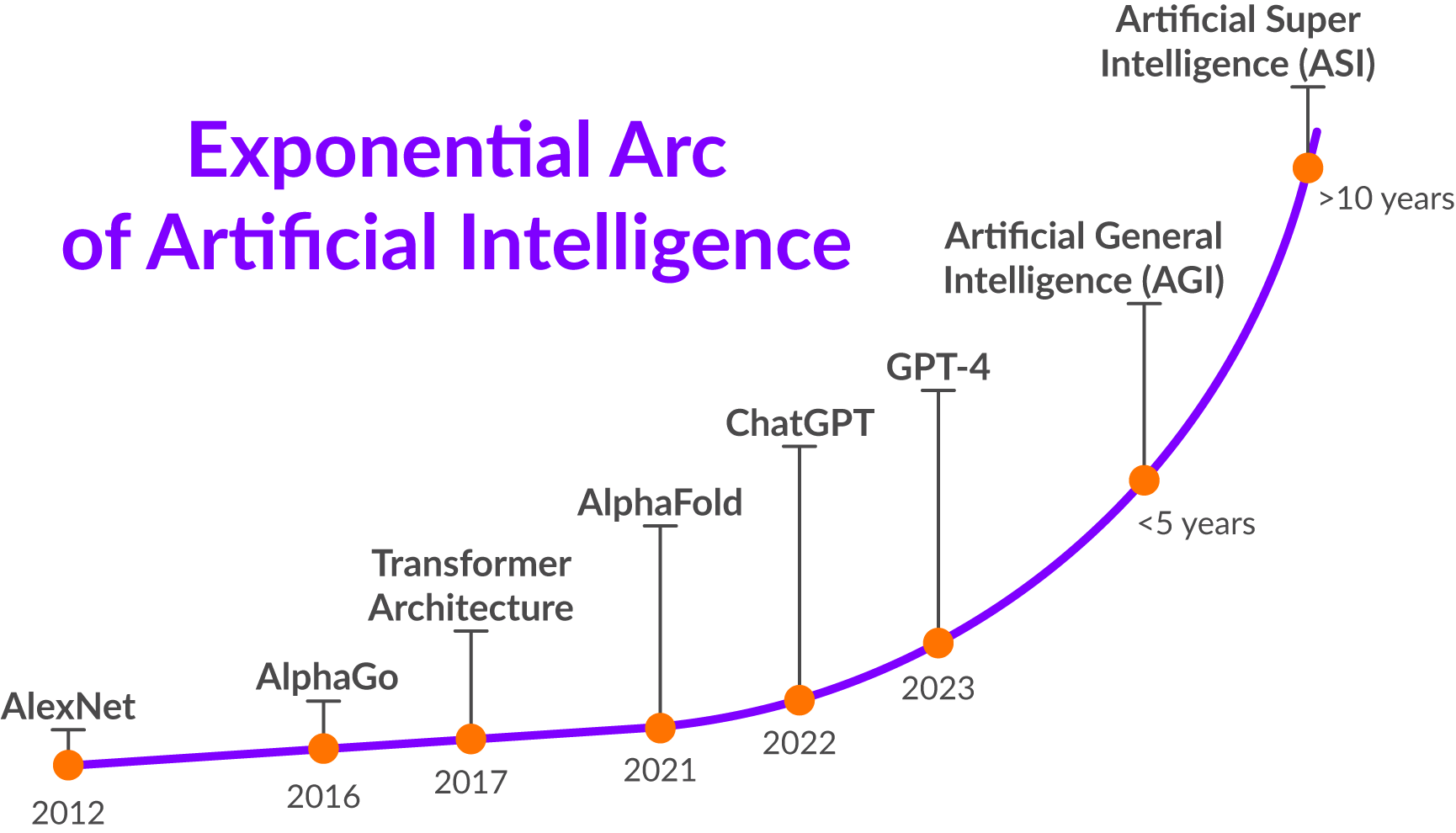

In 2012, Alex Krizhevsky, in collaboration with Ilya Sutskever (the ex-chief Scientist of OpenAI) and Geoffrey Hinton (formerly of Google Brain), built a Neural Network for image recognition that was trained using GPUs -- NVidia GPUs. Since then, the field has focused on putting more data and more GPUs behind bigger models.

Chinchillas and LLaMas

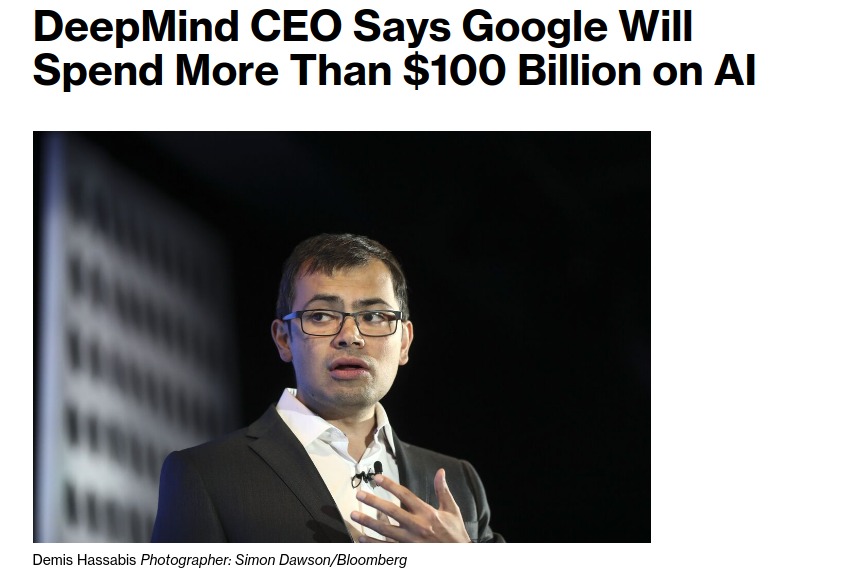

Chinchilla is a family of Large Language Models (LLMs) developed by DeepMind and presented in March 2022. The goal was to explore the effects of more data, more compute, and bigger neural networks. What happens if we increase each? Is there some limit? Will we continue to get better performance? The researchers found that increasing compute, data, and model size in tandem should pretty much always lead to better results. What does that mean exactly?

Bigger is Better

That means the industry doesn't really need to worry about fundamental breakthroughs in AI techniques. It just needs to throw more data, more compute, and bigger models at the problem.
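To get a feel for what scaling "in tandem" looks like, here is a rough back-of-the-envelope sketch. It leans on two rules of thumb that are assumptions here, not figures from this post: training compute is roughly 6 x parameters x tokens, and the Chinchilla results are often summarized as roughly 20 training tokens per parameter. The function name `compute_optimal_split` is made up for illustration.

```python
from typing import Tuple

# Rule-of-thumb constants (approximations, assumed for this sketch).
FLOPS_PER_PARAM_PER_TOKEN = 6   # training FLOPs ~ 6 * params * tokens
TOKENS_PER_PARAM = 20           # rough Chinchilla-style ratio of tokens to parameters


def compute_optimal_split(flops_budget: float) -> Tuple[float, float]:
    """Return a rough (params, tokens) split for a given training compute budget."""
    # Solve budget = 6 * N * D with D = 20 * N, which gives N = sqrt(budget / 120).
    params = (flops_budget / (FLOPS_PER_PARAM_PER_TOKEN * TOKENS_PER_PARAM)) ** 0.5
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


if __name__ == "__main__":
    for budget in (1e21, 1e23, 1e25):
        n, d = compute_optimal_split(budget)
        print(f"{budget:.0e} FLOPs -> ~{n:.2e} parameters, ~{d:.2e} tokens")
```

Under these assumptions, every 100x increase in compute grows both the model and the dataset by about 10x. Neither one is scaled alone, which is why the shopping list always includes more data and more GPUs together.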

"Microsoft, Meta, and Google’s parent company, Alphabet, disclosed this week that they had spent more than $32 billion combined on data centers and other capital expenses in just the first three months of the year." - WSJ

"Microsoft (MSFT.O), and OpenAI are working on plans for a data center project that could cost as much as $100 billion and include an artificial intelligence supercomputer called ‘Stargate’" - Reuters

Where does it end?

No one knows if there is a limit to the Transformer architecture. Some people, like LeCun of Meta, argue that this architecture is fundamentally limited because it lacks fundamental understanding and reasoning abilities. Obviously, Google, Microsoft and others are willing to bet hundreds of billions of dollars that we aren't close to the limits of the architecture.

AGI / ASI

No one knows exactly what LLMs are capable of, or whether they can lead to Artificial General Intelligence or Artificial Super Intelligence, mostly because we don't understand how they work internally. We are still poking the LLMs with sticks, like confused chimpanzees, hoping to gain some understanding (Towards Monosemanticity: Decomposing Language Models With Dictionary Learning https://transformer-circuits.pub/2023/monosemantic-features ). I guess we will soon find out.